Talend:FAQ

No edit summary |

No edit summary |

||

| (One intermediate revision by the same user not shown) | |||

| Line 6: | Line 6: | ||

==Jobs Over 1 Million Records== | ==Jobs Over 1 Million Records (Personator Component Only)== | ||

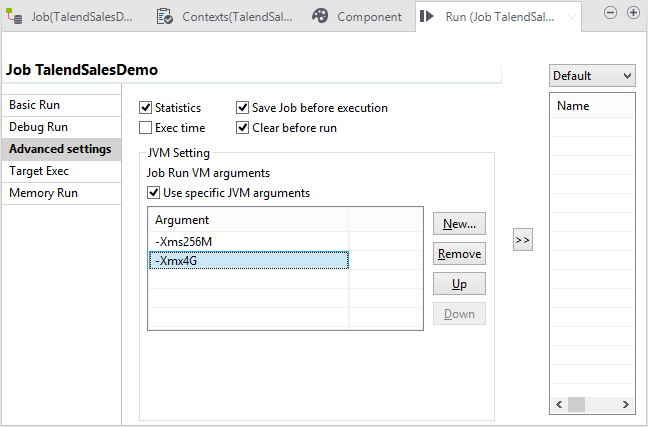

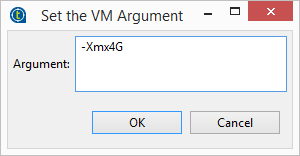

If you have a large job (anything over 1 million records) the last step before running the job is increasing heap memory. Click the "Use Specific JVM arguments" check box and double click the "–Xmx1024mb argument". Change this to <code>-Xmx4G</code> to ensure proper performance when processing very large jobs over 1 million records. | If you have a large job (anything over 1 million records) the last step before running the job is increasing heap memory. Click the "Use Specific JVM arguments" check box and double click the "–Xmx1024mb argument". Change this to <code>-Xmx4G</code> to ensure proper performance when processing very large jobs over 1 million records. | ||

| Line 20: | Line 20: | ||

This means your job is a large job and you need to increase the heap memory. Please see [[#Jobs Over 1 Million Records|Jobs Over 1 Million Records]] for more information. | This means your job is a large job and you need to increase the heap memory. Please see [[#Jobs Over 1 Million Records|Jobs Over 1 Million Records]] for more information. | ||

==NoClassDefFoundError: Integer== | |||

If you receive this error: | |||

<code>java.lang.NoClassDefFoundError: Integer</code> | |||

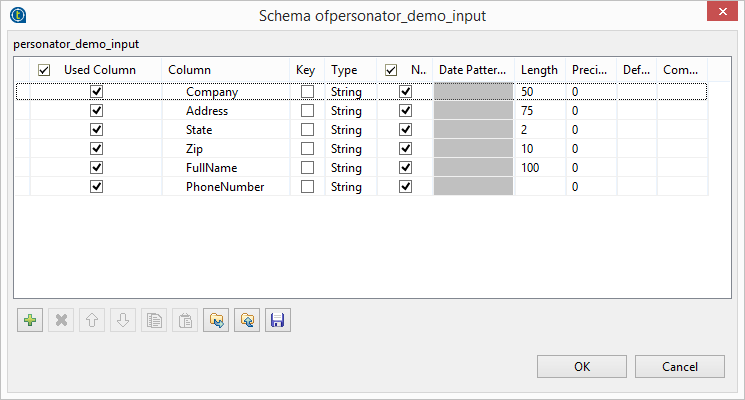

This means that one of your input columns is set to integer rather than string. To resolve this issue, please validate that the input schema are all set to string data types and try to run the job again. | |||

[[File:TALEND_NoClassDefFoundError.png|link=]] | |||

[[Category:FAQ]] | [[Category:FAQ]] | ||

[[Category:Talend]] | [[Category:Talend]] | ||

Latest revision as of 16:55, 9 March 2018


System Requirements

We recommend a minimum of 8 GB of RAM.

Jobs Over 1 Million Records (Personator Component Only)

If you have a large job (anything over 1 million records) the last step before running the job is increasing heap memory. Click the "Use Specific JVM arguments" check box and double click the "–Xmx1024mb argument". Change this to -Xmx4G to ensure proper performance when processing very large jobs over 1 million records.
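One way to confirm that a heap setting takes effect is to print the JVM's maximum heap size. The sketch below is a standalone class. The name HeapCheck is hypothetical and is not part of Talend or the Personator component. Run it with the same argument, for example `java -Xmx4G HeapCheck`, and it prints the heap ceiling in MiB. Some garbage collectors may report a value slightly below the -Xmx setting.

```java
public class HeapCheck {
    private static final long BYTES_PER_MIB = 1024L * 1024L;

    // Converts a byte count to whole mebibytes, rounding down.
    static long toMiB(long bytes) {
        return bytes / BYTES_PER_MIB;
    }

    public static void main(String[] args) {
        // maxMemory() is the most heap the JVM will attempt to use; -Xmx governs it.
        // It returns Long.MAX_VALUE if there is no inherent limit.
        long maxHeap = Runtime.getRuntime().maxMemory();
        System.out.println("Maximum heap: " + toMiB(maxHeap) + " MiB");
    }
}
```

Run standalone, this only shows what ceiling a given argument produces. To check the job itself, you could place the same `Runtime.getRuntime().maxMemory()` call in a tJava component. That placement is a suggestion, not a step from this FAQ. With -Xmx4G the figure should be close to 4096 MiB.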

Out of Memory Error

If you receive this error:

java.lang.OutOfMemoryError: Java heap space

This means your job is large enough to need more heap memory. Please see Jobs Over 1 Million Records for how to increase it.
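If the error persists after you raise the limit, check that the -Xmx argument reached the JVM at all. For example, the "Use Specific JVM arguments" check box may not be selected. The sketch below uses the standard java.lang.management API to list the JVM's startup arguments and pick out the -Xmx entry. The class and method names are hypothetical. The sketch only looks for the -Xmx form, not -XX:MaxHeapSize.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

public class XmxCheck {
    // Returns the last argument that starts with "-Xmx", or null if none is present.
    // It keeps the last occurrence because, when a flag is repeated, the later value normally wins.
    static String findXmx(List<String> jvmArgs) {
        String found = null;
        for (String arg : jvmArgs) {
            if (arg.startsWith("-Xmx")) {
                found = arg;
            }
        }
        return found;
    }

    public static void main(String[] args) {
        // getInputArguments() returns the options passed to the JVM itself,
        // not the arguments passed to main().
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        String xmx = findXmx(jvmArgs);
        if (xmx == null) {
            System.out.println("No -Xmx argument was passed; the JVM's default heap limit applies.");
        } else {
            System.out.println("Heap argument passed: " + xmx);
        }
    }
}
```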

NoClassDefFoundError: Integer

If you receive this error:

java.lang.NoClassDefFoundError: Integer

This means that one of your input columns is set to integer rather than string. To resolve this issue, please validate that the input schema are all set to string data types and try to run the job again.
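The fix recommended here is to set the schema columns to String. If an upstream source delivers a column as an Integer, you could also convert the value on the way in. The sketch below shows a null-safe conversion of the kind you might write in a tMap expression. The class, the method, and the ZIP code column are hypothetical illustrations. The example also shows why String is the safer type for values such as ZIP codes: once a ZIP code with a leading zero has been stored as an Integer, the zero is gone.

```java
public class SchemaCast {
    // Converts a possibly-null Integer column value to String.
    // A null input stays null, so it does not become the text "null".
    static String toStringColumn(Integer value) {
        return value == null ? null : String.valueOf(value);
    }

    public static void main(String[] args) {
        // Hypothetical ZIP code that was read into an Integer column upstream.
        Integer zipAsInteger = Integer.valueOf("02134");
        // Prints "2134": the leading zero was lost when the value became an Integer.
        System.out.println(toStringColumn(zipAsInteger));
    }
}
```

In a tMap expression the equivalent might be `row1.zip == null ? null : String.valueOf(row1.zip)`, where `row1.zip` is a hypothetical input column. Setting the type to String in the source schema avoids the lost leading zero entirely.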