This wiki is no longer being updated as of December 10, 2025.


MatchUp Hub:Establishing Benchmarks


*[ftp://ftp.melissadata.com/SampleCodes/Current/MatchUp/mdBenchmarkData.zip MatchUp Benchmark Data]

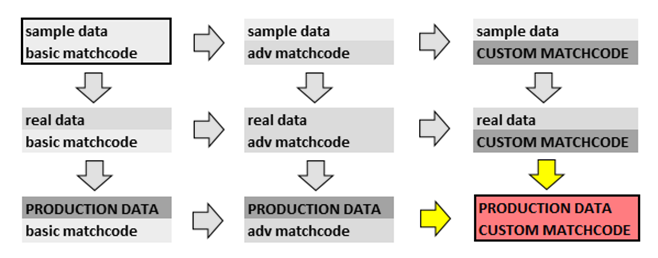


Latest revision as of 18:54, 1 October 2018

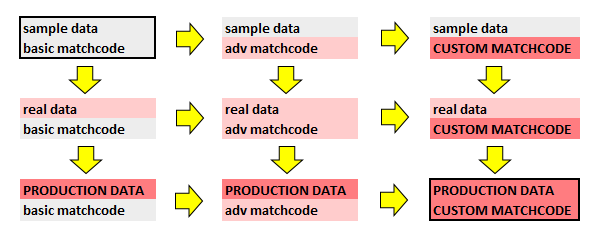

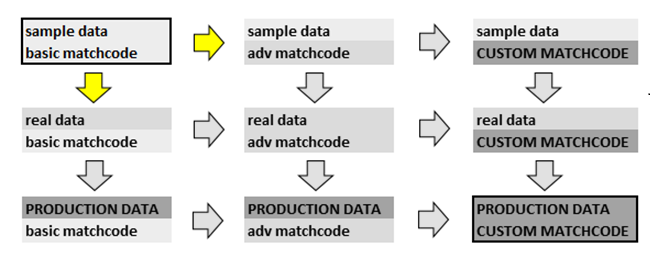

Migrating from Proof of Concept to Production Deployment

The purpose of establishing a benchmark, before proceeding to live production data and custom matchcodes, is to confirm that the stated requirements are achievable in your environment.

For this reason, we have provided a 1 million record benchmarking file to establish that your environment is unlikely to cause slow performance when moving forward.

In each benchmarking case, the benchmark should be established with the sample data stored locally relative to the local installation, using the recommended default matchcode, and without any of the available advanced options.

For MatchUp Object, we have provided sample benchmarking scripts (see MatchUp Hub:Library, Benchmarking). For ETL solutions, benchmarks should use the following configured settings, which simulate the most basic usage of the underlying object and represent the first step in a Proof of Concept to Production migration.

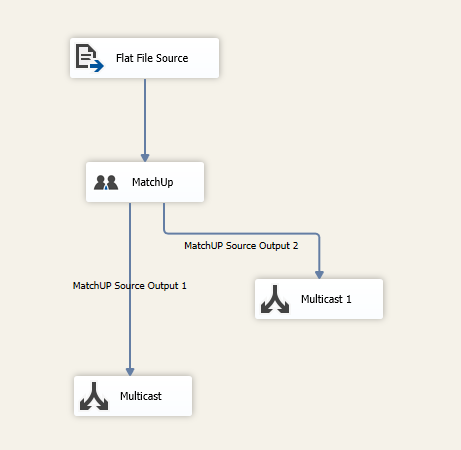

A simple file read, MatchUp keybuilding, deduping and output result stream will be established as the lone Data Flow operation…
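The shape of that single data flow (read, build a key, dedupe, emit results) can be sketched in a few lines of Python. This is only an illustration of the flow, not MatchUp itself: the `build_key` function, the `last_name` and `zip` column names, and the inline sample rows are all hypothetical stand-ins, and the key logic is far simpler than a real matchcode.

```python
import csv
import io


def build_key(row):
    # Hypothetical stand-in key (5-digit ZIP + upper-cased last name).
    # This is NOT MatchUp's matchcode or keybuilding logic.
    return (row["zip"].strip()[:5], row["last_name"].strip().upper())


def dedupe(rows):
    # Yield each row with a flag saying whether its key was seen before.
    seen = set()
    for row in rows:
        key = build_key(row)
        is_duplicate = key in seen
        seen.add(key)
        yield row, is_duplicate


# Inline sample standing in for the benchmark file read.
sample = io.StringIO(
    "last_name,zip\n"
    "Smith,92688\n"
    "smith ,92688-1234\n"
    "Jones,10001\n"
)

results = list(dedupe(csv.DictReader(sample)))
unique_rows = [row for row, dup in results if not dup]
duplicate_rows = [row for row, dup in results if dup]
```

Keeping the flow this minimal mirrors the intent of the benchmark: nothing beyond the read, the key, the dedupe, and the output should contribute to the measured time.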

All distributions of MatchUp provide the benchmark matchcode…

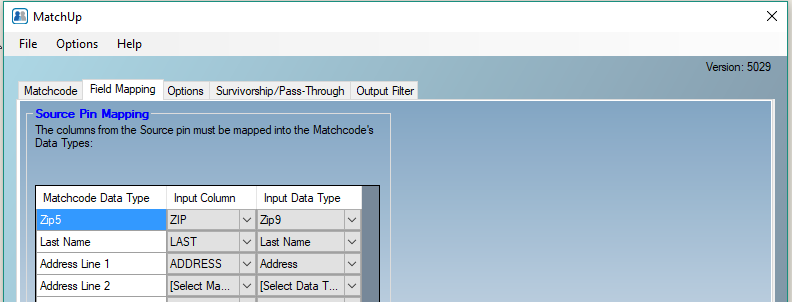

Basic input Field Mapping should be checked…

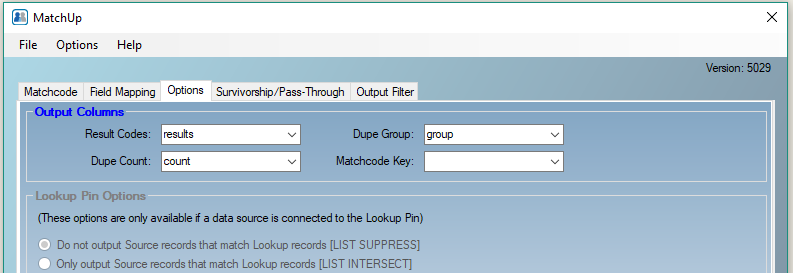

Force generation of important output properties compiled during a MatchUp process, although we’ll evaluate only the Result Code property to get Total record and Duplicate counts…

Although configuring Pass-Through options for all source data fields should have a negligible effect on our benchmarking, note that in actual production, passing a large amount of source data and/or using Advanced Survivorship can slow down a process considerably.

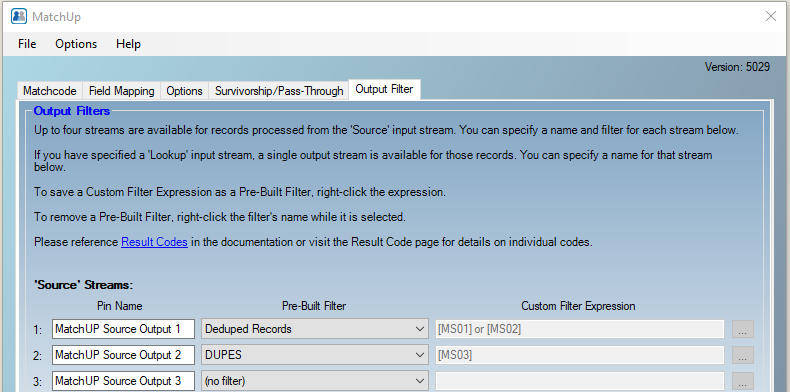

We’ll create two output streams that will allow us to tally the Total and Duplicate counts…
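Outside the ETL designer, the same tally can be checked with a short script over the exported Result Code values. The sketch below assumes each record carries a comma-separated result code string and that `MS03` is the code marking a duplicate record; both are assumptions to verify against the result code reference for your MatchUp version. The `tally` function and the sample values are hypothetical.

```python
def tally(result_codes, duplicate_code="MS03"):
    """Return (total_records, duplicate_records) from per-record code strings."""
    total = 0
    duplicates = 0
    for codes in result_codes:
        total += 1
        # A record may carry several codes, assumed comma-separated here.
        if duplicate_code in {code.strip() for code in codes.split(",")}:
            duplicates += 1
    return total, duplicates


# Hypothetical sample of Result Code values, one string per output record.
sample_codes = ["MS01", "MS02", "MS03", "MS03", "MS01"]
total, duplicates = tally(sample_codes)
```

Counting from the Result Code alone keeps the check independent of which output stream a record was routed to.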

After the benchmark program has been configured and successfully run, you are ready to make small incremental steps…

If the returned benchmark results do not closely resemble the expected benchmarks provided by Melissa Data, please fill out the Benchmark form and return it to Melissa Data. A complete form and any additional comments can help eliminate current or potential performance issues when the process is scaled up to resemble a production process.
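One way to decide whether a run "closely resembles" the expected benchmark is to convert the elapsed time to throughput and compare it against the published figure with a tolerance. The sketch below is a minimal illustration: the function names, the 25% tolerance, and the numbers in the example are placeholders, not figures published by Melissa Data.

```python
def records_per_second(record_count, elapsed_seconds):
    # Throughput of a benchmark run.
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return record_count / elapsed_seconds


def within_tolerance(measured, expected, tolerance=0.25):
    # True if measured throughput is no more than `tolerance` below expected.
    # The 25% default is an arbitrary placeholder, not a vendor threshold.
    return measured >= expected * (1 - tolerance)


# Placeholder numbers for illustration only.
measured = records_per_second(1_000_000, 200.0)
acceptable = within_tolerance(measured, expected=6000.0)
```

If `acceptable` comes back false for your run, that is the point at which submitting the Benchmark form is worthwhile.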